I have been writing performance test reports for almost a decade. Plotting the various graphs and charts for these reports often left us drained. We carefully followed conventions that the organization had developed over time, such as colour coding and formats. At the end of the day, I felt very good about having a document that was informative and that laid out the performance issues, proof points and tuning recommendations. Over the last decade, tools have gained many features that save time and energy in analysis and report generation. Now the activity of analysing and grading performance metrics is no longer required. Writing a few lines of observations and recommendations on top of an automated report seems sufficient in most cases.

These transformations, and recent trends in how stakeholders assess performance reports, motivated me to write this note. Performance testing is one of the most significant activities before production, so it is very important that the test report reflects all the stakeholders' needs and shows how their contributions or expectations were validated.

As a practising performance engineer, it is important to understand the stakeholders' roles while testing the performance of an application, and to communicate effectively and efficiently through test analysis reports. I hope this note will help performance engineers use their time and energy appropriately and be effective contributors to the people around them.

(Quoting Wikipedia), “Analysis is the process of breaking a complex topic or substance into smaller parts to gain a better understanding of it.”

It is important to understand that the “smaller parts” are different for each “role” involved in the program. A typical performance report, with response time trends, transaction rate, hits per second, throughput and resource utilization plotted against concurrent users, will not help all stakeholders directly.

Most of the time, performance engineers conduct a detailed analysis to indicate probable performance issues or improvements, but that analysis takes a back seat among project priorities. This leads to production issues and a loss of reputation for the performance engineer(s). To avoid this, a performance engineer needs to communicate with stakeholders in their language and from their perspectives. Let me explain using a typical retail scenario as an example.

Let’s assume the objective is to understand whether an e-commerce application can generate a typical number of orders, ‘X’, during peak hours. It is quite common for each “role” in the team to perceive this differently.

The performance engineer running the test might choose a certain number of items from the list provided and generate orders by using search methods to select items, by making random selections at run time, or by a combination of these. Tests are conducted with scenarios and conditions that meet the requirements, client and server statistics are collected, and a typical test report is shared. If there are no visible issues, the report claims that the e-commerce application met the goal of X orders per hour. After that, it goes into sleep mode without much attention until the day an issue is seen in production.
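To make the "X orders per hour" claim concrete, here is a minimal sketch of how such a check could be computed from raw test samples. The `OrderSample` type, the function names and the measurement window are all hypothetical, introduced only for illustration; a real load-testing tool will export results in its own format.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class OrderSample:
    """One order attempt recorded during the test (hypothetical shape)."""
    timestamp_s: float  # seconds since the start of the test
    succeeded: bool     # True if the order completed successfully


def orders_per_hour(samples: Iterable[OrderSample],
                    window_start_s: float,
                    window_end_s: float) -> float:
    """Rate of successful orders inside the window, scaled to one hour."""
    duration_s = window_end_s - window_start_s
    if duration_s <= 0:
        raise ValueError("window_end_s must be greater than window_start_s")
    completed = sum(
        1 for s in samples
        if s.succeeded and window_start_s <= s.timestamp_s < window_end_s
    )
    return completed * 3600.0 / duration_s


def meets_goal(samples: Iterable[OrderSample],
               target_per_hour: float,
               window_start_s: float,
               window_end_s: float) -> bool:
    """True if the measured order rate reaches the target 'X'."""
    rate = orders_per_hour(samples, window_start_s, window_end_s)
    return rate >= target_per_hour
```

Note that the sketch counts only successful orders inside the steady-state window. Which orders count, and over which window, is exactly the kind of assumption that different roles may read differently, so it is worth stating in the report.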

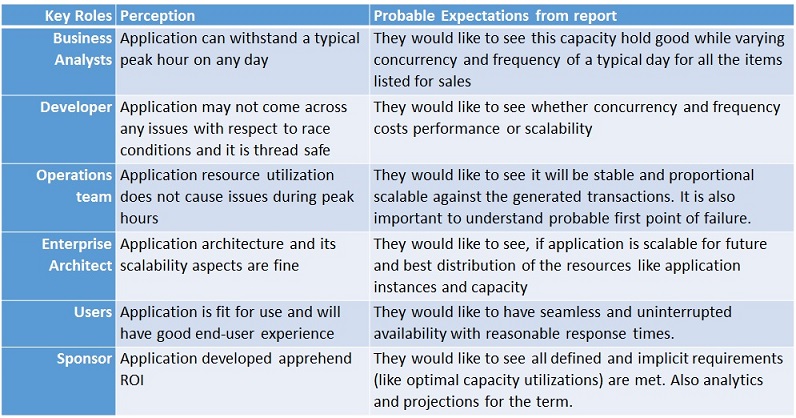

Let’s assess how each “role” might have perceived the information and what they would like to understand from the performance test and the report.

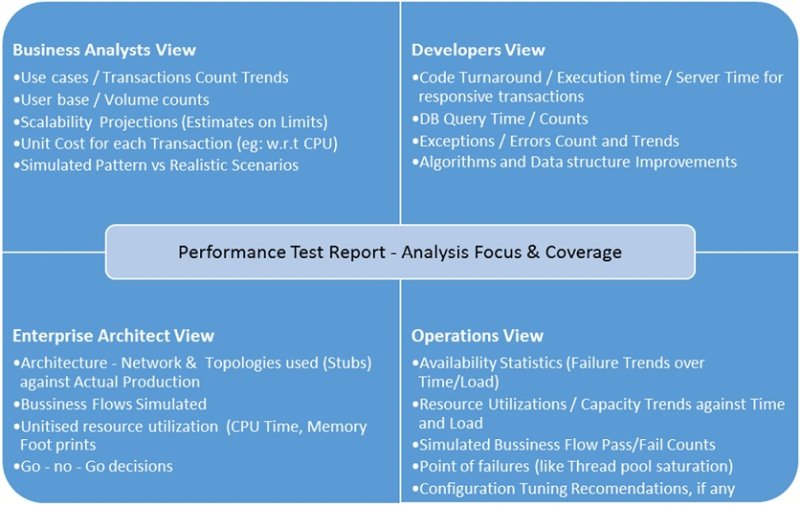

I would like to draw your attention to the following chart, which brings perspective on the coverage aspects of a test report.

I hope this helps you build one of your own, set expectations across roles and gather feedback before you spend significant time preparing long reports for your audiences.

When a system goes into production, each stakeholder involved will have contributed in some way. Because the performance test report review is a key milestone towards the end of the development cycle or release, it should give every “role” involved a relevant view that appreciates their contributions and suggests further improvements, if any are needed or possible.